6. Sampling and Observers¶

6.1. Primer on Monte-Carlo Integration¶

6.1.1. Average value method¶

The integrals encountered in optical ray-tracing are exact but difficult to evaluate analytically for most physically relevant scenes. Monte-Carlo integration is a technique for approximating the value of an integral by simulating a random process with random numbers.

The simplest form of Monte-Carlo integration is the average value method, which starts by considering the integral

The average value of the function \(f(x)\) over the domain can be expressed in terms of the integral over the same domain.

Therefore, through manipulation we can express our integral in terms of the average of \(f(x)\). We can measure \(<f(x)>\) by sampling \(f(x)\) at \(N\) points \(x_1\), \(x_2\), …, \(x_N\) chosen randomly between \(a\) and \(b\).

\(p(x_i) = 1/(a-b)\) is the uniform distribution we are sampling from. For a rectangular pixel in a camera the sampling distributions would be uniformly distributed 2D points in the square area \(A\) and 3D vectors in the hemisphere \(\Omega\).

In general, the standard error on the approximation scales as \(1/\sqrt{N}\) with \(N\) samples.

6.1.2. Importance Sampling¶

The average value formula works well for smoothly varying functions but becomes inefficient when the integral contains steep gradients or a divergence, for example, a bright point light source. The standard error on the integral estimator can become large in such circumstances.

We can get around these problems by drawing the sample points \(x_i\) from a non-uniform probability density function (i.e. higher density for regions with stronger emission). Formally, \(p(x_i)\) is defined as

where \(w(x)\) is the weight function describing the distribution of sampling points. \(p(x)\) has the same functional shape as \(w(x)\) but has been normalised so that its integral is 1. For uniform sampling, \(w(x) = 1\) and \(p(x_i) = 1/(b-a)\) recovering the average value formula.

For the general case function \(w(x)\) the estimator for the integral is now

This is the fundamental formula of importance sampling and a generalisation of the average value method. It allows estimation of the integral \(I\) by performing a weighted sum, which can be weighted to have higher density in regions of interest. The price we pay is that the random samples are being drawn from a more complicated distribution.

Importance sampling exploits the fact that the Monte-Carlo estimator converges fastest when samples are taken from a distribution p(x) that is similar to the function f(x) in the integrand (i.e. concentrate the calculations where the integrand is high).

6.1.3. Multiple Importance Sampling¶

It is difficult to construct a single sampling distribution that represents a physically relevant scene. Instead, the integrand of the lighting equations can be approximated as sums and products of the underlying features in a scene, such as individual light sources and geometry factors. Ideally we would sample all candidate distributions in a physical scene.

Suppose the complicated integrand in our scene can approximated by a set operations on a number of simpler underlying distributions. e.g.

Multiple importance sampling is a generalisation of the importance sampling equation which allows us to evaluate complicated integrands by simultaneously sampling multiple underlying important distributions (see Veach, E. 1998). Without going into the derivation, the integrand becomes

with sample weights given by

6.2. Observing Surfaces¶

The total power (radiant flux) measured by an observing surface is given be the integral of the incident radiance over the collecting solid angle \(\Omega\) and surface area \(A\). Therefore the observer equation is

Here, \(L_{i}(x_j, \omega_j)\) is the incident radiance at a given point \(x_j\) and incident angle \(\omega_j\) on the observing surface. \(\cos (\theta_j)\) is a geometry factor describing the increase in effective observing area as the incident rays become increasingly parallel to the surface.

The mean radiance measured by an observing surface is simply

All raysect observers measure the mean spectral radiance \(L\) as their base quantity. All other quantities of interest can be evaluated through integration over areas, solid angles and nm.

Let us now re-express this equation in terms of a general Monte Carlo estimator. The samples will be drawn from two different distributions, 2D points on the observing surface and 3D Vectors over the \(2/pi\) solid angle hemisphere. Therefore, the average value estimator would become

where \(p_A(x_j)\) is the probability of drawing sample point \(x_j\) from area \(A\) and \(p_\Omega(\omega_j)\) is the probability of drawing sample vector \(\omega_j\) from solid angle \(\Omega\).

This is the root equation being evaluated in all Raysect observers. In most cases the detector area is sampled with uniform sampling and hence \(p_A(x_j) = 1/A\) ends up cancelling \(A\) out of the equation. It is far more common to use a variety of sampling strategies for the sample directions, \(\omega_j\), and hence \(p_\Omega(\omega_j)\) is typically more important.

Let us now consider some more specialised cases.

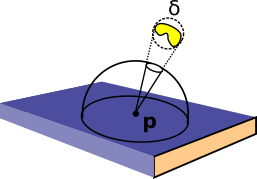

Caption: Example sampling geometry for a pixel in a camera. (Image credit: Carr, M., Meakins, A., et. al. (2017))¶

6.2.1. CCD Pixel with Uniform Hemisphere Sampling¶

Let us consider one of the most common cases for an observing surface, a rectangular pixel surface with a hemisphere of solid angle over which incident light can be collected. To calculate the Monte Carlo estimator we need \(N\) sample points and vectors. The simplest vector distribution would be the uniform distribution where all angles over \(2 \pi\) have equal probability of being sampled. The weighting distribution is

Thus the distributions normalisation constant evaluates to the area of solid angle being sampled, similar to the \(b-a\) length scale for the 1D cartesian case.

Therefore \(p(\omega)\) for uniformly distributed vectors is

The estimator for the mean radiance becomes

6.2.2. CCD Pixel with a Cosine distribution¶

As mentioned above, it is often advantageous to draw samples from a distribution with similar shape to the function being integrated. The observer equation is weighted with a cosine theta term meaning that vector samples near the top of the hemisphere are weighted much more than samples near the edge. Hence it is useful to generate vector samples proportional to the cosine distribution.

The normalisation constant, \(c\), can be evaluated by integrating \(w(\omega)\) over the domain.

Therefore \(p(\omega_j)\) for a cosine distribution would be

and the estimator becomes

Note that the cosine factors have cancelled, which makes sense since we are treating the \(\cos(\theta)\) term as the important distribution.

6.2.3. Optical Fibre¶

Consider an optical fibre with circular area \(A_f\) and radius \(r_f\). The area of solid angle over which light can be collected is a cone with half angle \(\theta_{max}\). The circular area can be sampled with uniformly distributed points, meaning \(p_A(x_j)\) would cancel out again. An appropriate vector sampling distribution is the uniform cone, where vectors are sampled uniformly over the circular solid angle centred around the z-axis with angle to z \(\theta_{max}\). Samples around the z-axis can be transformed by rotation without effecting the sample density. The weighting function is uniform and hence

The normalisation constant for the distribution is now the fractional solid angle area,

with corresponding pdf equal to

The estimator is

6.2.4. Sampling the lights¶

Arbitrary light sources and important primitives can be sampled with the projection method. The light (or primitive) in question is wrapped in a bounding sphere that just encloses the object. It is then a matter of projecting the bounding sphere into a uniform cone at the surface of the sample point. The vector from the sample point to the bounding sphere can be used to find a disk inside the bounding sphere that defines the effective solid angle to be sampled.

Caption: A complex light source sampled with the projection method.¶

6.3. Material Surfaces¶

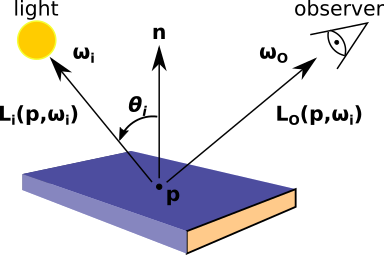

The amount of light that reaches a camera from a point on an object surface is given by the sum of light emitted and reflected from other sources.

This equation is the same as the observer equation with the addition of the bidirectional reflectance distribution function (BRDF) term \(f(\omega_i , \omega_o )\). The BRDF is a weighting function that describes the redistribution of incident light into outgoing reflections and transmission/absorption. It is commonly approximated in terms of two ideal material components, specular and diffuse reflections. Ideal specular reflection behaves like a mirror where the incoming light is perfectly reflected into one angle, \(f_s (\omega_i, \omega_o ) = \rho_s(\omega_i )\delta(\omega_i ,\omega_o )\). An ideal diffuse surface (matte paper) will evenly redistribute incident light across all directions and hence has no angular dependance, \(f_d (\omega_i , \omega_o ) = \rho_d /\pi\). Real physical materials exhibit a complex combination of both behaviours in addition to transmission and absorption.

Caption: Example geometry for light reflected from a material surface. (Image credit: Carr, M., Meakins, A., et. al. (2017))¶

6.3.1. Sampling the BRDF¶

6.4. Participating Media¶

Volume scattering, fluorescence and opacity effects.